This is the multi-page printable view of this section. Click here to print.

AgileTV CDN Solutions

- 1: Components

- 1.1: AgileTV CDN Director (esb3024)

- 1.1.1: Getting Started

- 1.1.2: Installation

- 1.1.2.1: Installing a 1.22 release

- 1.1.2.1.1: Configuration changes between 1.20 and 1.22

- 1.1.2.2: Installing a 1.20 release

- 1.1.2.2.1: Configuration changes between 1.18 and 1.20

- 1.1.2.3: Installing a 1.18 release

- 1.1.2.3.1: Configuration changes between 1.16 and 1.18

- 1.1.2.4: Installing a 1.16 release

- 1.1.2.4.1: Configuration changes between 1.14 and 1.16

- 1.1.2.5: Installing a 1.14 release

- 1.1.2.6: Installing a 1.12 release

- 1.1.2.7: Installing release 1.10.x

- 1.1.2.8: Installing release 1.8.0

- 1.1.2.9: Installing release 1.6.0

- 1.1.3: Firewall

- 1.1.4: API Overview

- 1.1.5: Configuration

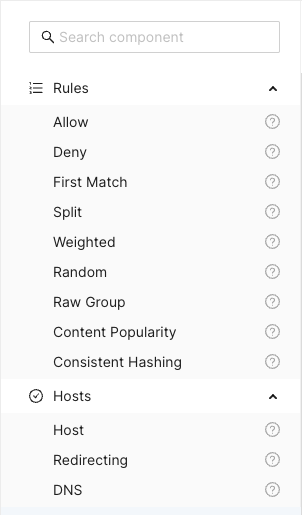

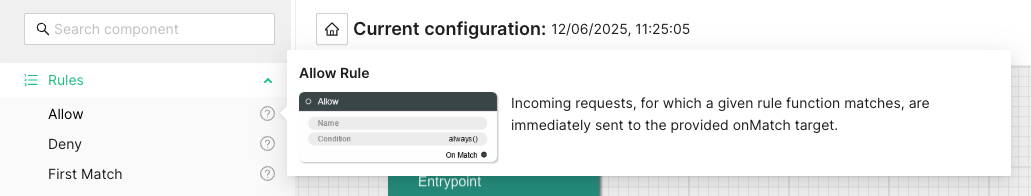

- 1.1.5.1: WebUI Configuration

- 1.1.5.2: OLD WebUI Configuration

- 1.1.5.3: Confd and Confcli

- 1.1.5.4: Session Groups and Classification

- 1.1.5.5: Accounts

- 1.1.5.6: Data streams

- 1.1.5.7: Selection Input Configurations

- 1.1.5.8: Advanced features

- 1.1.5.8.1: Content popularity

- 1.1.5.8.2: Consistent Hashing

- 1.1.5.8.3: Security token verification

- 1.1.5.8.4: Subnets API

- 1.1.5.8.5: Lua Features

- 1.1.5.8.5.1: Built-in Lua Functions

- 1.1.5.8.5.2: Global Lua Tables

- 1.1.5.8.5.3: Request Translation Function

- 1.1.5.8.5.4: Session Translation Function

- 1.1.5.8.5.5: Host Request Translation Function

- 1.1.5.8.5.6: Response Translation Function

- 1.1.5.8.5.7: Sending HTTP requests from translation functions

- 1.1.5.9: Trusted proxies

- 1.1.5.10: Confd Auto Upgrade Tool

- 1.1.6: Operations

- 1.1.6.1: Services

- 1.1.6.2: Geographic Databases

- 1.1.7: Convoy Bridge

- 1.1.8: Monitoring

- 1.1.8.1: Access logging

- 1.1.8.2: System troubleshooting

- 1.1.8.3: Scraping data with Prometheus

- 1.1.8.4: Visualizing data with Grafana

- 1.1.8.4.1: Managing Grafana

- 1.1.8.4.2: Grafana Dashboards

- 1.1.8.5: Alarms and Alerting

- 1.1.8.6: Monitoring multiple routers

- 1.1.8.7: Routing Rule Evaluation Metrics

- 1.1.8.8: Metrics

- 1.1.8.8.1: Internal Metrics

- 1.1.9: Releases

- 1.1.9.1: Release esb3024-1.22.0

- 1.1.9.2: Release esb3024-1.20.1

- 1.1.9.3: Release esb3024-1.18.0

- 1.1.9.4: Release esb3024-1.16.0

- 1.1.9.5: Release esb3024-1.14.2

- 1.1.9.6: Release esb3024-1.14.0

- 1.1.9.7: Release esb3024-1.12.1

- 1.1.9.8: Release esb3024-1.12.0

- 1.1.9.9: Release esb3024-1.10.2

- 1.1.9.10: Release esb3024-1.10.1

- 1.1.9.11: Release esb3024-1.10.0

- 1.1.9.12: Release esb3024-1.8.0

- 1.1.9.13: Release esb3024-1.6.0

- 1.1.9.14: Release esb3024-1.4.0

- 1.1.9.15: Release acd-router-1.2.3

- 1.1.9.16: Release acd-router-1.2.0

- 1.1.9.17: Release acd-router-1.0.0

- 1.1.10: Glossary

- 1.2: AgileTV Account Aggregator (esb3032)

- 1.2.1: Getting Started

- 1.2.2: Releases

- 1.2.2.1: Release esb3032-0.2.0

- 1.2.2.2: Release esb3032-1.0.0

- 1.2.2.3: Release esb3032-1.2.1

- 1.2.2.4: Release esb3032-1.4.0

- 1.3: AgileTV CDN Manager (esb3027)

- 1.3.1: Getting Started

- 1.3.2: System Requirements Guide

- 1.3.3: Architecture Guide

- 1.3.4: Installation Guide

- 1.3.4.1: Overview

- 1.3.4.2: Requirements

- 1.3.4.3: Quick Start Guide

- 1.3.4.4: Installation Guide

- 1.3.4.5: Upgrade Guide

- 1.3.4.6: Post Installation Guide

- 1.3.5: Configuration Guide

- 1.3.6: Networking

- 1.3.7: Storage Guide

- 1.3.8: Metrics and Monitoring

- 1.3.9: Operations Guide

- 1.3.10: Releases

- 1.3.10.1: Release esb3027-1.4.1

- 1.3.10.2: Release esb3027-1.4.0

- 1.3.10.3: Release esb3027-1.2.1

- 1.3.10.4: Release esb3027-1.2.0

- 1.3.10.5: Release esb3027-1.0.0

- 1.3.11: API Guides

- 1.3.11.1: Healthcheck API

- 1.3.11.2: Authentication API

- 1.3.11.3: Router API

- 1.3.11.4: Selection Input API

- 1.3.11.5: Operator UI API

- 1.3.12: Use Cases

- 1.3.12.1: Custom Deployments

- 1.3.13: Common Issues

- 1.3.14: Troubleshooting Guide

- 1.3.15: Glossary

- 1.4: AgileTV Cache (esb2001,esb3004)

- 1.5: BGP Sniffer (esb3013)

- 1.6: AgileTV Convoy Manager (classic) (esb3006)

- 1.7: Orbit CDN Request Router (esb3008)

- 2: Introduction

- 3: Solutions

- 3.1: Cache hardware metrics: monitoring and routing

- 3.2: Private CDN Offload Routing with DNS

- 3.3: Token blocking

- 3.4: Monitor ACD with Prometheus, Grafana and Alert Manager

- 4: Use Cases

- 4.1: Route on GeoIP/ASN

- 4.2: Route on Subnet

- 4.3: Route on Content Type

- 4.4: Route on Content Popularity

- 4.5: Route on Selection Input

- 4.6: Adapt to multi-CDN

- 4.7: How to use ACD router for EDNS routing

- 4.8: How to use ESB3024 Router with Orbit Request Router

- 4.9: How to use ESB3024 Router with CoreDNS

- 4.10: How to use the Director as a replacement for Orbit Request Router

- 4.11: Use In-Stream Sessions

- 5: Ready for Production?

- 5.1: Setup for Redundancy

- 5.2: HTTPS Certificates

1 - Components

1.1 - AgileTV CDN Director (esb3024)

1.1.1 - Getting Started

The Director serves as a versatile network service designed to redirect incoming HTTP(s) requests to the optimal host or Content Delivery Network (CDN) by evaluating various request properties through a set of rules. Although requests can be generic, the primary focus centers around audio-video content delivery. The rule engine allows users to construct routing configurations using predefined blocks, providing for the creation of intricate routing logic. This modular approach allows the users to tailor and streamline the content delivery process to meet their specific needs. The Director’s flexible rule engine takes into account factors such as geographical location, server load, content type, and other metadata from external sources to intelligently route incoming requests. It supports dynamic adjustments to seamlessly adapt to changing network conditions, ensuring efficient and reliable content delivery. The Director improves the overall user experience by delivering content from the most suitable and responsive sources, thereby reducing latency and enhancing performance.

Requirements

Hardware

The Director is designed to be installed and operated on commodity hardware, ensuring accessibility for a broad range of users. The minimum hardware specifications are as follows:

- CPU: x86-64 AMD or Intel with at least 2 cores.

- Memory: At least 2 GB free at runtime.

Operating System Compatibility

The Director is officially supported on Red Hat Enterprise Linux 8 or 9 or any

compatible operating system. In order to run the service, a minimum CPU

architecture of x86-64-v2 is required. This can be determined by running the

following command. If supported, it will be listed as “(supported)” in the

output.

/usr/lib64/ld-linux-x86-64.so.2 --help | grep x86-64-v2

External Internet access is necessary during the installation process for the installer to download and install additional dependencies. This ensures a seamless setup and optimal functionality of the Director on Red Hat Enterprise Linux 8 or 9. It’s worth noting that, due to the unique workings of the DNF package manager in Red Hat Enterprise Linux with rolling package streams, an air-gapped installation process is not available.

Firewall Recommendations

See Firewall.

Installation

See Installation.

Operations

See Operations.

Configuration Process

Once the router is operational, it requires a valid configuration before it can route incoming requests.

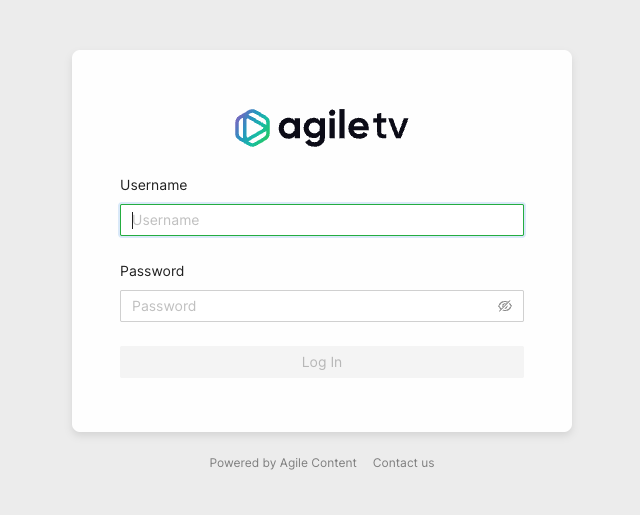

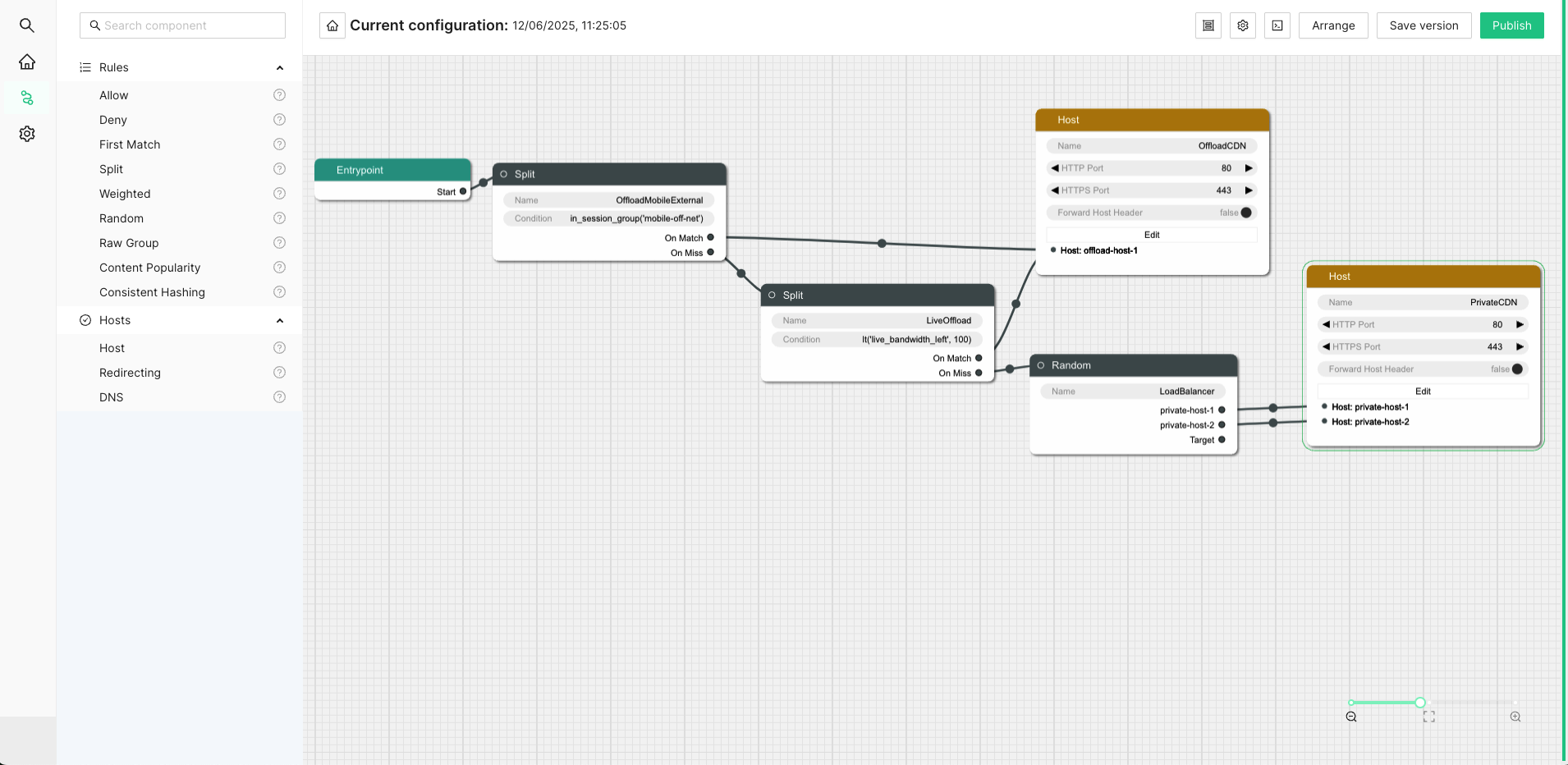

There are currently three methods available for configuring the router, each catering to different levels of complexity. The first is a Web UI, suitable for the most common use-cases, providing an intuitive interface for configuration. The second involves utilizing a confd REST service, complemented by an optional command line tool, confcli, suitable for all but the most advanced scenarios. The third method involves leveraging an internal REST API, ideal for the most intricate cases where using confd proves to be less flexible. It’s essential to note that as the configuration method advances through these levels, both flexibility and complexity increase, providing users with tailored options based on their specific needs and expertise.

API Key Management

Regardless of the method used to configure the system, a unique API key is

crucial for safeguarding the router’s configuration and preventing unauthorized

access to the API. This key must be supplied when interacting with the API.

During the router software installation, an automatically generated API key is

created and can be located on the installed system at

/opt/edgeware/acd/router/cache/rest-api-key.json. The structure of this file

is as follows:

{"api_key": "abc123"}

When accessing the internal configuration API, the key must be included in the

X-API-key header of the request, as shown below:

curl -v -k -H "X-API-Key: abc123" https://<router-host.example>:5001/v2/configuration

Modification to the authentication key and behavior can be done through the

/v2/rest_api_key endpoint. To change the key, a PUT request with a JSON body

of the same structure can be sent to the endpoint:

curl -v -k -X PUT -T new-key.json -H "X-API-Key: abc123" \

-H "Content-Type: application/json" https://<router-host.example>:5001/v2/rest_api_key

Additionally, key authentication can be disabled completely by sending a DELETE

request to the endpoint:

curl -v -k -X DELETE -H "X-API-Key: abc123" \

https://<router-host.example>:5001/v2/rest_api_key

In the event of a lost or forgotten authentication key, it can always be

retrieved at /opt/edgeware/acd/router/cache/rest-api-key.json on the

machine running the router. It is critical to emphasize that the API key should

remain private to prevent unauthorized access to the internal API, as it grants

full access to the router’s configuration.

Configuration Basics

Upon completing the installation process and configuring the API keys, the subsequent section will provide guidance on configuring the router to route all incoming requests to a single host. For straightforward CDN Offload use cases, there is a web based user interface described here.

For further details on configuring the router using confd and confcli, please consult the Confd documentation.

The initial step involves defining the target host group. In this illustration,

a singular group named all will be established, comprising two hosts.

$ confcli services.routing.hostGroups -w

Running wizard for resource 'hostGroups'

Hint: Hitting return will set a value to its default.

Enter '?' to receive the help string

hostGroups : [

hostGroup can be one of

1: dns

2: host

3: redirecting

Choose element index or name: host

Adding a 'host' element

hostGroup : {

name (default: ): all

type (default: host):

httpPort (default: 80):

httpsPort (default: 443):

hosts : [

host : {

name (default: ): host1.example.com

hostname (default: ): host1.example.com

ipv6_address (default: ):

}

Add another 'host' element to array 'hosts'? [y/N]: y

host : {

name (default: ): host2.example.com

hostname (default: ): host2.example.com

ipv6_address (default: ):

}

Add another 'host' element to array 'hosts'? [y/N]: n

]

}

Add another 'hostGroup' element to array 'hostGroups'? [y/N]: n

]

Generated config:

{

"hostGroups": [

{

"name": "all",

"type": "host",

"httpPort": 80,

"httpsPort": 443,

"hosts": [

{

"name": "host1.example.com",

"hostname": "host1.example.com",

"ipv6_address": ""

},

{

"name": "host2.example.com",

"hostname": "host2.example.com",

"ipv6_address": ""

}

]

}

]

}

Merge and apply the config? [y/n]:

After defining the host group, the next step is to establish a rule that directs

incoming requests to the designated host. In this example, a sole rule named

random will be generated, ensuring that all incoming requests are consistently

routed to the previously defined host.

$ confcli services.routing.rules -w

Running wizard for resource 'rules'

Hint: Hitting return will set a value to its default.

Enter '?' to receive the help string

rules : [

rule can be one of

1: allow

2: consistentHashing

3: contentPopularity

4: deny

5: firstMatch

6: random

7: rawGroup

8: rawHost

9: split

10: weighted

Choose element index or name: random

Adding a 'random' element

rule : {

name (default: ): random

type (default: random):

targets : [

target (default: ): host1.example.com

Add another 'target' element to array 'targets'? [y/N]: y

target (default: ): host2.example.com

Add another 'target' element to array 'targets'? [y/N]: n

]

}

Add another 'rule' element to array 'rules'? [y/N]: n

]

Generated config:

{

"rules": [

{

"name": "random",

"type": "random",

"targets": [

"host1.example.com",

"host2.example.com"

]

}

]

}

Merge and apply the config? [y/n]:

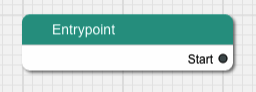

The last essential step involves instructing the router on which rule should

serve as the entry point into the routing tree. In this example, we designate

the rule random as the entrypoint for the routing process.

$ confcli services.routing.entrypoint random

services.routing.entrypoint = 'random'

Once this configuration is defined, all incoming requests will initiate their

traversal through the routing rules, starting with the rule named random. This

rule is designed to consistently match for every incoming request, effectively load

balancing evenly between host1.example.com and host2.example.com on port 80

or 443, depending on whether the initial request was made using HTTP or HTTPS.

Integration with Convoy

The router is equipped with the capability to synchronize specific configuration metadata with a separate Convoy installation through the integrated convoy-bridge service. However, this service necessitates additional setup and configuration, and you can find comprehensive details on the process here..

Additional Resources

Additional documentation resources are included with the Director and can be

accessed at the following directory: /opt/edgeware/acd/documentation/. This

directory contains supplementary materials to provide users with comprehensive

information and guidance for optimizing their experience with the Director.

Ready for Production

Once the Director software is completely installed and configured, there are a few additional considerations before moving to a full production environment. See the section Ready for Production for additional information.

1.1.2 - Installation

1.1.2.1 - Installing a 1.22 release

To install ESB3024 Router, you first need to copy the installation ISO image to

the target node where the router will be run. Due to the way the installer

operates, it is necessary that the host is reachable by ssh from itself for

the user account that will perform the installation, and that this user has

sudo access.

Prerequisites:

Ensure that the current user has

sudoaccess.sudo -lIf the above command fails, you may need to add the user to the

/etc/sudoersfile.Ensure that the installer has ssh access to

localhost.If using the

rootuser, thePermitRootLoginproperty of the/etc/ssh/sshd_configfile must be set to ‘yes’.Ensure that

sshpassis installed.If the installer is run by the root user, this step is not necessary.

sshpassis installed by typing this:sudo dnf install -y sshpass

Assuming the installation ISO image is in the current working directory,

the following steps need to be executed either by root user or with sudo.

Mount the installation ISO image under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.22.0.iso /mnt/acdRun the installer script.

/mnt/acd/installerIf it is not running as root, the installer will ask both for the “SSH password” and the “BECOME password”. The “SSH password” is the password that the user running the installer uses to log in to the local machine, and the “BECOME password” is the password for the user to gain

sudoaccess. They are usually the same.

Upgrading From an Earlier ESB3024 Router Release

The following steps can be taken to upgrade the router from a 1.10 or later release to 1.22.0. If upgrading from an earlier release it is recommended to first upgrade to 1.10.1 and then to upgrade to 1.22.0.

The upgrade procedure for the router is performed by taking a backup of the configuration, installing the new release of the router, and applying the saved configuration.

With the router running, save a backup of the configuration.

The exact procedure to accomplish this depends on the current method of configuration, e.g. if

confdis used, then the configuration should be extracted fromconfd, but if the REST API is used directly, then the configuration must be saved by fetching the current configuration snapshot using the REST API.Extracting the configuration using

confdis the recommend approach where available.confcli | tee config_backup.jsonTo extract the configuration from the REST API, the following may be used instead. Depending on the version of the router used, an API-Key may be required to fetch from the REST API.

curl --insecure https://localhost:5001/v2/configuration \ | tee config_backup.jsonIf the API Key is required, it can be found in the file

/opt/edgeware/acd/router/cache/rest-api-key.jsonand can be passed to the API by setting the value of theX-API-Keyheader.curl --insecure -H "X-API-Key: 1234abcd" \ https://localhost:5001/v2/configuration \ | tee config_backup.jsonMount the new installation ISO under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.22.0.iso /mnt/acdStop the router and all associated services.

Before upgrading the router it needs to be stopped, which can be done by typing this:

systemctl stop 'acd-*'Run the installer script.

/mnt/acd/installerPlease note that the installer will install new container images, but it will not remove the old ones. The old images can be removed manually after the upgrade is complete.

Migrate the configuration.

Note that this step only applies if the router is configured using

confd. If it is configured using the REST API, this step is not necessary.The confd configuration used in the previous versions is not directly compatible with 1.22, and may need to be converted. If this is not done, the configuration will not be valid and it will not be possible to make configuration changes.

The

acd-confd-migrationtool will automatically apply any necessary schema migrations. Further details about this tool can be found at Confd Auto Upgrade Tool.The tool takes as input the old configuration file, either by reading the file directly, or by reading from standard input, applies any necessary migrations between the two specified versions, and outputs a new configuration to standard output which is suitable for being applied to the upgraded system. While the tool has the ability to migrate between multiple versions at a time, the earliest supported version is 1.10.1.

The example below shows how to upgrade from 1.20.1. If upgrading from 1.18.0,

--from 1.20.1should be replaced with--from 1.18.0.The command line required to run the tool is different depending on which esb3024 release it is run on. On 1.22.0 it is run like this:

cat config_backup.json | \ podman run -i --rm \ images.edgeware.tv/acd-confd-migration:1.22.0 \ --in - --from 1.20.1 --to 1.22.0 \ | tee config_upgraded.jsonAfter running the above command, apply the new configuration to

confdby runningcat config_upgraded.json | confcli -i.

Troubleshooting

If there is a problem running the installer, additional debug information can

be output by adding -v or -vv or -vvv to the installer command, the

more “v” characters, the more detailed output.

1.1.2.1.1 - Configuration changes between 1.20 and 1.22

Confd configuration changes

Below are the changes to the confd configuration between versions 1.20 and 1.22 listed.

Removed services.routing.settings.usageLog.enabled

The services.routing.settings.usageLog.enabled setting has been removed. The

usage log is always enabled and this setting is no longer necessary.

Replaced forwardHostHeader with headersToForward

The services.routing.hostGroups.<name>.forwardHostHeader setting has been

replaced with services.routing.hostGroups.<name>.headersToForward, which is a

list of headers to forward to the origin server.

See CDNs and Hosts for more information.

Added selectionInputFetchBase

The integration.manager.selectionInputFetchBase setting has been added. It is

used to configure the base URL for fetching initial selection input from the

manager. See Selection Input Configurations for more information.

Added the requestHeader classifier

A new classifier, requestHeader, has been added. See Session

Classification for more

information.

Added patternSource to the subnet classifier

The subnet classifier has been extended with a new setting, patternSource.

See Session Classification for more

information.

1.1.2.2 - Installing a 1.20 release

To install ESB3024 Router, you first need to copy the installation ISO image

to the target node where the router will be run. Due to the way the

installer operates, it is necessary that the host is reachable by

password-less SSH from itself for the user account that will perform the

installation, and that this user has sudo access.

Prerequisites:

Ensure that the current user has

sudoaccess.sudo -lIf the above command fails, you may need to add the user to the

/etc/sudoersfile.Ensure that the installer has password-less SSH access to

localhost.If using the

rootuser, thePermitRootLoginproperty of the/etc/ssh/sshd_configfile must be set to ‘yes’.The local host key must also be included in the

.ssh/authorized_keysfile of the user running the installer. That can be done by issuing the following as the intended user:mkdir -m 0700 -p ~/.ssh ssh-keyscan localhost >> ~/.ssh/authorized_keysNote! The

ssh-keyscanutility will result in the key fingerprint being output on the console. As a security best-practice it is recommended to verify that this host-key matches the machine’s true SSH host key. As an alternative, to thisssh-keyscanapproach, establishing an SSH connection to localhost and accepting the host key will have the same result.Disable SELinux.

The Security-Enhanced Linux Project (SELinux) is designed to add an additional layer of security to the operating system by enforcing a set of rules on processes. Unfortunately out of the box the default configuration is not compatible with the way the installer operates. Before proceeding with the installation, it is recommended to disable SELinux. It can be re-enabled after the installation completes, if desired, but will require manual configuration. Refer to the Red Hat Customer Portal for details.

To check if SELinux is enabled:

getenforceThis will result in one of 3 states, “Enforcing”, “Permissive” or “Disabled”. If the state is “Enforcing” use the following to disable SELinux. Either “Permissive” or “Disabled” is required to continue.

setenforce 0This disables SELinux, but does not make the change persistent across reboots. To do that, edit the

/etc/selinux/configfile and set theSELINUXproperty todisabled.It is recommended to reboot the computer after changing SELinux modes, but the changes should take effect immediately.

Assuming the installation ISO image is in the current working directory,

the following steps need to be executed either by root user or with sudo.

Mount the installation ISO image under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.20.1.iso /mnt/acdRun the installer script.

/mnt/acd/installer

Upgrading From an Earlier ESB3024 Router Release

The following steps can be taken to upgrade the router from a 1.10 or later release to 1.20.1. If upgrading from an earlier release it is recommended to first upgrade to 1.10.1 and then to upgrade to 1.20.1.

The upgrade procedure for the router is performed by taking a backup of the configuration, installing the new release of the router, and applying the saved configuration.

With the router running, save a backup of the configuration.

The exact procedure to accomplish this depends on the current method of configuration, e.g. if

confdis used, then the configuration should be extracted fromconfd, but if the REST API is used directly, then the configuration must be saved by fetching the current configuration snapshot using the REST API.Extracting the configuration using

confdis the recommend approach where available.confcli | tee config_backup.jsonTo extract the configuration from the REST API, the following may be used instead. Depending on the version of the router used, an API-Key may be required to fetch from the REST API.

curl --insecure https://localhost:5001/v2/configuration \ | tee config_backup.jsonIf the API Key is required, it can be found in the file

/opt/edgeware/acd/router/cache/rest-api-key.jsonand can be passed to the API by setting the value of theX-API-Keyheader.curl --insecure -H "X-API-Key: 1234abcd" \ https://localhost:5001/v2/configuration \ | tee config_backup.jsonMount the new installation ISO under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.20.1.iso /mnt/acdStop the router and all associated services.

Before upgrading the router it needs to be stopped, which can be done by typing this:

systemctl stop 'acd-*'Run the installer script.

/mnt/acd/installerPlease note that the installer will install new container images, but it will not remove the old ones. The old images can be removed manually after the upgrade is complete.

Migrate the configuration.

Note that this step only applies if the router is configured using

confd. If it is configured using the REST API, this step is not necessary.The confd configuration used in the previous versions is not directly compatible with 1.20, and may need to be converted. If this is not done, the configuration will not be valid and it will not be possible to make configuration changes.

The

acd-confd-migrationtool will automatically apply any necessary schema migrations. Further details about this tool can be found at Confd Auto Upgrade Tool.The tool takes as input the old configuration file, either by reading the file directly, or by reading from standard input, applies any necessary migrations between the two specified versions, and outputs a new configuration to standard output which is suitable for being applied to the upgraded system. While the tool has the ability to migrate between multiple versions at a time, the earliest supported version is 1.10.1.

The example below shows how to upgrade from 1.10.2. If upgrading from 1.14.0,

--from 1.10.2should be replaced with--from 1.14.0.The command line required to run the tool is different depending on which esb3024 release it is run on. On 1.20.1 it is run like this:

cat config_backup.json | \ podman run -i --rm \ images.edgeware.tv/acd-confd-migration:1.20.1 \ --in - --from 1.10.2 --to 1.20.1 \ | tee config_upgraded.jsonAfter running the above command, apply the new configuration to

confdby runningcat config_upgraded.json | confcli -i.

Troubleshooting

If there is a problem running the installer, additional debug information can

be output by adding -v or -vv or -vvv to the installer command, the

more “v” characters, the more detailed output.

1.1.2.2.1 - Configuration changes between 1.18 and 1.20

Confd configuration changes

Below are the changes to the confd configuration between versions 1.18 and 1.20 listed.

Added Kafka bootstrap server settings

The integration.kafka section has been added. It only contains

bootstrapServers, which is a list of Kafka bootstrap servers that the router

may connect to. The Kafka settings are described in the Data streams

section.

Added data streams settings

The services.routing.dataStreams section has been added. It contains

configuration for incoming and outgoing data streams in the incoming and

outgoing sections. See Data streams

for more information.

Added allowAnyRedirectType setting

A new setting, services.routing.hostGroups.<name>.allowAnyRedirectType, has

been added. It makes the Director interpret any 3xx response from a redirecting

as a redirect. See

CDNs and Hosts for more

information.

1.1.2.3 - Installing a 1.18 release

To install ESB3024 Router, one first needs to copy the installation ISO image

to the target node where the router will be run. Due to the way the

installer operates, it is necessary that the host is reachable by

password-less SSH from itself for the user account that will perform the

installation, and that this user has sudo access.

Prerequisites:

Ensure that the current user has

sudoaccess.sudo -lIf the above command fails, you may need to add the user to the

/etc/sudoersfile.Ensure that the installer has password-less SSH access to

localhost.If using the

rootuser, thePermitRootLoginproperty of the/etc/ssh/sshd_configfile must be set to ‘yes’.The local host key must also be included in the

.ssh/authorized_keysfile of the user running the installer. That can be done by issuing the following as the intended user:mkdir -m 0700 -p ~/.ssh ssh-keyscan localhost >> ~/.ssh/authorized_keysNote! The

ssh-keyscanutility will result in the key fingerprint being output on the console. As a security best-practice it is recommended to verify that this host-key matches the machine’s true SSH host key. As an alternative, to thisssh-keyscanapproach, establishing an SSH connection to localhost and accepting the host key will have the same result.Disable SELinux.

The Security-Enhanced Linux Project (SELinux) is designed to add an additional layer of security to the operating system by enforcing a set of rules on processes. Unfortunately out of the box the default configuration is not compatible with the way the installer operates. Before proceeding with the installation, it is recommended to disable SELinux. It can be re-enabled after the installation completes, if desired, but will require manual configuration. Refer to the Red Hat Customer Portal for details.

To check if SELinux is enabled:

getenforceThis will result in one of 3 states, “Enforcing”, “Permissive” or “Disabled”. If the state is “Enforcing” use the following to disable SELinux. Either “Permissive” or “Disabled” is required to continue.

setenforce 0This disables SELinux, but does not make the change persistent across reboots. To do that, edit the

/etc/selinux/configfile and set theSELINUXproperty todisabled.It is recommended to reboot the computer after changing SELinux modes, but the changes should take effect immediately.

Assuming the installation ISO image is in the current working directory,

the following steps need to be executed either by root user or with sudo.

Mount the installation ISO image under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.18.0.iso /mnt/acdRun the installer script.

/mnt/acd/installer

Upgrading From an Earlier ESB3024 Router Release

The following steps can be taken to upgrade the router from a 1.10 or later release to 1.18.0. If upgrading from an earlier release it is recommended to first upgrade to 1.10.1 and then to upgrade to 1.18.0.

The upgrade procedure for the router is performed by taking a backup of the configuration, installing the new release of the router, and applying the saved configuration.

With the router running, save a backup of the configuration.

The exact procedure to accomplish this depends on the current method of configuration, e.g. if

confdis used, then the configuration should be extracted fromconfd, but if the REST API is used directly, then the configuration must be saved by fetching the current configuration snapshot using the REST API.Extracting the configuration using

confdis the recommend approach where available.confcli | tee config_backup.jsonTo extract the configuration from the REST API, the following may be used instead. Depending on the version of the router used, an API-Key may be required to fetch from the REST API.

curl --insecure https://localhost:5001/v2/configuration \ | tee config_backup.jsonIf the API Key is required, it can be found in the file

/opt/edgeware/acd/router/cache/rest-api-key.jsonand can be passed to the API by setting the value of theX-API-Keyheader.curl --insecure -H "X-API-Key: 1234abcd" \ https://localhost:5001/v2/configuration \ | tee config_backup.jsonMount the new installation ISO under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.18.0.iso /mnt/acdStop the router and all associated services.

Before upgrading the router it needs to be stopped, which can be done by typing this:

systemctl stop 'acd-*'Run the installer script.

/mnt/acd/installerPlease note that the installer will install new container images, but it will not remove the old ones. The old images can be removed manually after the upgrade is complete.

Migrate the configuration.

Note that this step only applies if the router is configured using

confd. If it is configured using the REST API, this step is not necessary.The confd configuration used in the previous versions is not directly compatible with 1.18, and may need to be converted. If this is not done, the configuration will not be valid and it will not be possible to make configuration changes.

The

acd-confd-migrationtool will automatically apply any necessary schema migrations. Further details about this tool can be found at Confd Auto Upgrade Tool.The tool takes as input the old configuration file, either by reading the file directly, or by reading from standard input, applies any necessary migrations between the two specified versions, and outputs a new configuration to standard output which is suitable for being applied to the upgraded system. While the tool has the ability to migrate between multiple versions at a time, the earliest supported version is 1.10.1.

The example below shows how to upgrade from 1.10.2. If upgrading from 1.14.0,

--from 1.10.2should be replaced with--from 1.14.0.The command line required to run the tool is different depending on which esb3024 release it is run on. On 1.18.0 it is run like this:

cat config_backup.json | \ podman run -i --rm \ images.edgeware.tv/acd-confd-migration:1.18.0 \ --in - --from 1.10.2 --to 1.18.0 \ | tee config_upgraded.jsonAfter running the above command, apply the new configuration to

confdby runningcat config_upgraded.json | confcli -i.

Troubleshooting

If there is a problem running the installer, additional debug information can

be output by adding -v or -vv or -vvv to the installer command, the

more “v” characters, the more detailed output.

1.1.2.3.1 - Configuration changes between 1.16 and 1.18

Confd Configuration Changes

Below are the changes to the confd configuration between versions 1.16 and 1.18 listed.

Added Content Popularity Settings

The services.routing.settings.contentPopularity section has got the following

new settings.

popularityListMaxSizescoreBasedtimeBased

The new settings are described in the content popularity section.

1.1.2.4 - Installing a 1.16 release

To install ESB3024 Router, one first needs to copy the installation ISO image

to the target node where the router will be run. Due to the way the

installer operates, it is necessary that the host is reachable by

password-less SSH from itself for the user account that will perform the

installation, and that this user has sudo access.

Prerequisites:

Ensure that the current user has

sudoaccess.sudo -lIf the above command fails, you may need to add the user to the

/etc/sudoersfile.Ensure that the installer has password-less SSH access to

localhost.If using the

rootuser, thePermitRootLoginproperty of the/etc/ssh/sshd_configfile must be set to ‘yes’.The local host key must also be included in the

.ssh/authorized_keysfile of the user running the installer. That can be done by issuing the following as the intended user:mkdir -m 0700 -p ~/.ssh ssh-keyscan localhost >> ~/.ssh/authorized_keysNote! The

ssh-keyscanutility will result in the key fingerprint being output on the console. As a security best-practice it is recommended to verify that this host-key matches the machine’s true SSH host key. As an alternative, to thisssh-keyscanapproach, establishing an SSH connection to localhost and accepting the host key will have the same result.Disable SELinux.

The Security-Enhanced Linux Project (SELinux) is designed to add an additional layer of security to the operating system by enforcing a set of rules on processes. Unfortunately out of the box the default configuration is not compatible with the way the installer operates. Before proceeding with the installation, it is recommended to disable SELinux. It can be re-enabled after the installation completes, if desired, but will require manual configuration. Refer to the Red Hat Customer Portal for details.

To check if SELinux is enabled:

getenforceThis will result in one of 3 states, “Enforcing”, “Permissive” or “Disabled”. If the state is “Enforcing” use the following to disable SELinux. Either “Permissive” or “Disabled” is required to continue.

setenforce 0This disables SELinux, but does not make the change persistent across reboots. To do that, edit the

/etc/selinux/configfile and set theSELINUXproperty todisabled.It is recommended to reboot the computer after changing SELinux modes, but the changes should take effect immediately.

Assuming the installation ISO image is in the current working directory,

the following steps need to be executed either by root user or with sudo.

Mount the installation ISO image under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.16.0.iso /mnt/acdRun the installer script.

/mnt/acd/installer

Upgrade from and earlier ESB3024 Router release

The following steps can be taken to upgrade the router from a 1.10 or later release to 1.16.0. If upgrading from an earlier release it is recommended to first upgrade to 1.10.1 and then to upgrade to 1.16.0.

The upgrade procedure for the router is performed by taking a backup of the configuration, installing the new release of the router, and applying the saved configuration.

With the router running, save a backup of the configuration.

The exact procedure to accomplish this depends on the current method of configuration, e.g. if

confdis used, then the configuration should be extracted fromconfd, but if the REST API is used directly, then the configuration must be saved by fetching the current configuration snapshot using the REST API.Extracting the configuration using

confdis the recommend approach where available.confcli | tee config_backup.jsonTo extract the configuration from the REST API, the following may be used instead. Depending on the version of the router used, an API-Key may be required to fetch from the REST API.

curl --insecure https://localhost:5001/v2/configuration \ | tee config_backup.jsonIf the API Key is required, it can be found in the file

/opt/edgeware/acd/router/cache/rest-api-key.jsonand can be passed to the API by setting the value of theX-API-Keyheader.curl --insecure -H "X-API-Key: 1234abcd" \ https://localhost:5001/v2/configuration \ | tee config_backup.jsonMount the new installation ISO under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.16.0.iso /mnt/acdStop the router and all associated services.

Before upgrading the router it needs to be stopped, which can be done by typing this:

systemctl stop 'acd-*'Run the installer script.

/mnt/acd/installerMigrate the configuration.

Note that this step only applies if the router is configured using

confd. If it is configured using the REST API, this step is not necessary.The confd configuration used in the previous versions is not directly compatible with 1.16, and may need to be converted. If this is not done, the configuration will not be valid and it will not be possible to make configuration changes.

The

acd-confd-migrationtool will automatically apply any necessary schema migrations. Further details about this tool can be found at Confd Auto Upgrade Tool.The tool takes as input the old configuration file, either by reading the file directly, or by reading from standard input, applies any necessary migrations between the two specified versions, and outputs a new configuration to standard output which is suitable for being applied to the upgraded system. While the tool has the ability to migrate between multiple versions at a time, the earliest supported version is 1.10.1.

The example below shows how to upgrade from 1.10.2. If upgrading from 1.14.0,

--from 1.10.2should be replaced with--from 1.14.0.The command line required to run the tool is different depending on which esb3024 release it is run on. On 1.16.0 it is run like this:

cat config_backup.json | \ podman run -i --rm \ images.edgeware.tv/acd-confd-migration:1.16.0 \ --in - --from 1.10.2 --to 1.16.0 \ | tee config_upgraded.jsonAfter running the above command, apply the new configuration to

confdby runningcat config_upgraded.json | confcli -i.

Troubleshooting

If there is a problem running the installer, additional debug information can

be output by adding -v or -vv or -vvv to the installer command, the

more “v” characters, the more detailed output.

1.1.2.4.1 - Configuration changes between 1.14 and 1.16

Confd configuration changes

Below are the changes to the confd configuration between versions 1.14 and 1.16 listed.

Added region GeoIP classifier

Classifiers of type geoip now have a region property.

Added integration.routing.gui configuration

There is now an integration.routing.gui section which will be used by the

GUI.

Added services.routing.accounts configuration

The services.routing.accounts list has been added to the configuration.

1.1.2.5 - Installing a 1.14 release

To install ESB3024 Router, one first needs to copy the installation ISO image

to the target node where the router will be run. Due to the way the

installer operates, it is necessary that the host is reachable by

password-less SSH from itself for the user account that will perform the

installation, and that this user has sudo access.

Prerequisites:

Ensure that the current user has

sudoaccess.sudo -lIf the above command fails, you may need to add the user to the

/etc/sudoersfile.Ensure that the installer has password-less SSH access to

localhost.If using the

rootuser, thePermitRootLoginproperty of the/etc/ssh/sshd_configfile must be set to ‘yes’.The local host key must also be included in the

.ssh/authorized_keysfile of the user running the installer. That can be done by issuing the following as the intended user:mkdir -m 0700 -p ~/.ssh ssh-keyscan localhost >> ~/.ssh/authorized_keysNote! The

ssh-keyscanutility will result in the key fingerprint being output on the console. As a security best-practice it is recommended to verify that this host-key matches the machine’s true SSH host key. As an alternative, to thisssh-keyscanapproach, establishing an SSH connection to localhost and accepting the host key will have the same result.Disable SELinux.

The Security-Enhanced Linux Project (SELinux) is designed to add an additional layer of security to the operating system by enforcing a set of rules on processes. Unfortunately out of the box the default configuration is not compatible with the way the installer operates. Before proceeding with the installation, it is recommended to disable SELinux. It can be re-enabled after the installation completes, if desired, but will require manual configuration. Refer to the Red Hat Customer Portal for details.

To check if SELinux is enabled:

getenforceThis will result in one of 3 states, “Enforcing”, “Permissive” or “Disabled”. If the state is “Enforcing” use the following to disable SELinux. Either “Permissive” or “Disabled” is required to continue.

setenforce 0This disables SELinux, but does not make the change persistent across reboots. To do that, edit the

/etc/selinux/configfile and set theSELINUXproperty todisabled.It is recommended to reboot the computer after changing SELinux modes, but the changes should take effect immediately.

Assuming the installation ISO image is in the current working directory,

the following steps need to be executed either by root user or with sudo.

Mount the installation ISO image under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.14.0.iso /mnt/acdRun the installer script.

/mnt/acd/installer

Upgrade from and earlier ESB3024 Router release

The following steps can be used to upgrade the router from a 1.10 or 1.12 release to 1.14.0. If upgrading from an earlier release it is recommended to first upgrade to 1.10.1 in multiple steps; for instance when upgrading from release 1.8.0 to 1.14.0, it is recommended to first upgrade to 1.10.1 and then to 1.14.0.

The upgrade procedure for the router is performed by taking a backup of the configuration, installing the new release of the router, and applying the saved configuration.

With the router running, save a backup of the configuration.

The exact procedure to accomplish this depends on the current method of configuration, e.g. if

confdis used, then the configuration should be extracted fromconfd, but if the REST API is used directly, then the configuration must be saved by fetching the current configuration snapshot using the REST API.Extracting the configuration using

confdis the recommend approach where available.confcli | tee config_backup.jsonTo extract the configuration from the REST API, the following may be used instead. Depending on the version of the router used, an API-Key may be required to fetch from the REST API.

curl --insecure https://localhost:5001/v2/configuration \ | tee config_backup.jsonIf the API Key is required, it can be found in the file

/opt/edgeware/acd/router/cache/rest-api-key.jsonand can be passed to the API by setting the value of theX-API-Keyheader.curl --insecure -H "X-API-Key: 1234abcd" \ https://localhost:5001/v2/configuration \ | tee config_backup.jsonMount the new installation ISO under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.14.0.iso /mnt/acdStop the router and all associated services.

Before upgrading the router it needs to be stopped, which can be done by typing this:

systemctl stop 'acd-*'Run the installer script.

/mnt/acd/installerMigrate the configuration.

Note that this step only applies if the router is configured using

confd. If it is configured using the REST API, this step is not necessary.The confd configuration used in the previous versions is not directly compatible with 1.14, and may need to be converted. If this is not done, the configuration will not be valid and it will not be possible to make configuration changes.

The

acd-confd-migrationtool will automatically apply any necessary schema migrations. Further details about this tool can be found at Confd Auto Upgrade Tool.The tool takes as input the old configuration file, either by reading the file directly, or by reading from standard input, applies any necessary migrations between the two specified versions, and outputs a new configuration to standard output which is suitable for being applied to the upgraded system. While the tool has the ability to migrate between multiple versions at a time, the earliest supported version is 1.10.1.

The example below shows how to upgrade from 1.10.2. If upgrading from 1.12.0,

--from 1.10.2should be replaced with--from 1.12.0.The command line required to run the tool is different depending on which esb3024 release it is run on. On 1.14.0 it is run like this:

cat config_backup.json | \ podman run -i --rm \ images.edgeware.tv/acd-confd-migration:1.14.0 \ --in - --from 1.10.2 --to 1.14.0 \ | tee config_upgraded.jsonAfter running the above command, apply the new configuration to

confdby runningcat config_upgraded.json | confcli -i.

Troubleshooting

If there is a problem running the installer, additional debug information can

be output by adding -v or -vv or -vvv to the installer command, the

more “v” characters, the more detailed output.

1.1.2.5.1 - Configuration changes between 1.12.1 and 1.14

Confd configuration changes

Below are the changes to the confd configuration between versions 1.12.1 and 1.14.x listed.

Renamed services.routing.settings.allowedProxies

The configuration setting services.routing.settings.allowedProxies has been

renamed to services.routing.settings.trustedProxies.

Added services.routing.tuning.general.restApiMaxBodySize

This parameter configures the maximum body size for the REST API. It mainly applies to the configuration, which sometimes has a large payload size.

1.1.2.6 - Installing a 1.12 release

To install ESB3024 Router, one first needs to copy the installation ISO image

to the target node where the router will be run. Due to the way the

installer operates, it is necessary that the host is reachable by

password-less SSH from itself for the user account that will perform the

installation, and that this user has sudo access.

Prerequisites:

Ensure that the current user has

sudoaccess.sudo -lIf the above command fails, you may need to add the user to the

/etc/sudoersfile.Ensure that the installer has password-less SSH access to

localhost.If using the

rootuser, thePermitRootLoginproperty of the/etc/ssh/sshd_configfile must be set to ‘yes’.The local host key must also be included in the

.ssh/authorized_keysfile of the user running the installer. That can be done by issuing the following as the intended user:mkdir -m 0700 -p ~/.ssh ssh-keyscan localhost >> ~/.ssh/authorized_keysNote! The

ssh-keyscanutility will result in the key fingerprint being output on the console. As a security best-practice its recommended to verify that this host-key matches the machine’s true SSH host key. As an alternative, to thisssh-keyscanapproach, establishing an SSH connection to localhost and accepting the host key will have the same result.Disable SELinux.

The Security-Enhanced Linux Project (SELinux) is designed to add an additional layer of security to the operating system by enforcing a set of rules on processes. Unfortunately out of the box the default configuration is not compatible with the way the installer operates. Before proceeding with the installation, it is recommended to disable SELinux. It can be re-enabled after the installation completes, if desired, but will require manual configuration. Refer to the Red Hat Customer Portal for details.

To check if SELinux is enabled:

getenforceThis will result in one of 3 states, “Enforcing”, “Permissive” or “Disabled”. If the state is “Enforcing” use the following to disable SELinux. Either “Permissive” or “Disabled” is required to continue.

setenforce 0This disables SELinux, but does not make the change persistent across reboots. To do that, edit the

/etc/selinux/configfile and set theSELINUXproperty todisabled.It is recommended to reboot the computer after changing SELinux modes, but the changes should take effect immediately.

Assuming the installation ISO image is in the current working directory,

the following steps need to be executed either by root user or with sudo.

Mount the installation ISO image under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-esb3024-1.12.1.iso /mnt/acdRun the installer script.

/mnt/acd/installer

Upgrade from ESB3024 Router release 1.10

The following steps can be used to upgrade the router from a 1.10 release to 1.12.0 or 1.12.1. If upgrading from an earlier release it is recommended to perform the upgrade in multiple steps; for instance when upgrading from release 1.8.0 to 1.12.1, it is recommended to first upgrade to 1.10.1 or 1.10.2 and then to 1.12.1.

The upgrade procedure for the router is performed by taking a backup of the configuration, installing the new release of the router, and applying the saved configuration.

With the router running, save a backup of the configuration.

The exact procedure to accomplish this depends on the current method of configuration, e.g. if

confdis used, then the configuration should be extracted fromconfd, but if the REST API is used directly, then the configuration must be saved by fetching the current configuration snapshot using the REST API.Extracting the configuration using

confdis the recommend approach where available.confcli | tee config_backup.jsonTo extract the configuration from the REST API, the following may be used instead. Depending on the version of the router used, an API-Key may be required to fetch from the REST API.

curl --insecure https://localhost:5001/v2/configuration \ | tee config_backup.jsonIf the API Key is required, it can be found in the file

/opt/edgeware/acd/router/cache/rest-api-key.jsonand can be passed to the API by setting the value of theX-API-Keyheader.curl --insecure -H "X-API-Key: 1234abcd" \ https://localhost:5001/v2/configuration \ | tee config_backup.jsonMount the new installation ISO under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-esb3024-1.12.1.iso /mnt/acdStop the router and all associated services.

Before upgrading the router it needs to be stopped, which can be done by typing this:

systemctl stop 'acd-*'Run the installer script.

/mnt/acd/installerMigrate the configuration.

Note that this step only applies if the router is configured using

confd. If it is configured using the REST API, this step is not necessary.The confd configuration used in the 1.10 versions is not directly compatible with 1.12, and may need to be converted. If this is not done, the configuration will not be valid and it will not be possible to make configuration changes.

To help with migrating the configuration, a new tool has been included in the 1.12.0 release, which will automatically apply any necessary schema migrations. Further details about this tool can be found here. Confd Auto Upgrade.

The

confd-auto-upgradetool takes as input the old configuration file, either by reading the file directly, or by reading from standard input, applies any necessary migrations between the two specified versions, and outputs a new configuration to standard output which is suitable for being applied to the upgraded system. While the tool has the ability to migrate between multiple versions at a time, the earliest supported version is 1.10.1.The example below shows how to upgrade from 1.10.2. If upgrading from 1.10.1,

--from 1.10.2should be replaced with--from 1.10.1.The command line required to run the tool is different if it is run on esb3024-1.12.0 or esb3024-1.12.1. On 1.12.1 it is run like this:

cat config_backup.json | \ podman run -i --rm \ images.edgeware.tv/auto-upgrade-esb3024-1.12.1-master:20240702T151205Z-f1b53a98f \ --in - --from 1.10.2 --to 1.12.1 \ | tee config_upgraded.jsonOn esb3024-1.12.0 it is run like this:

cat config_backup.json | \ podman run -i --rm \ images.edgeware.tv/auto-upgrade-esb3024-1.12.0:20240619T154952Z-2b72f7400 \ --in - --from 1.10.2 --to 1.12.0 \ | tee config_upgraded.jsonAfter running the above command, apply the new configuration to

confdby runningcat config_upgraded.json | confcli -i.

Troubleshooting

If there is a problem running the installer, additional debug information can

be output by adding -v or -vv or -vvv to the installer command, the

more “v” characters, the more detailed output.

1.1.2.6.1 - Configuration changes between 1.10.2 and 1.12

Confd configuration changes

Below are the major changes to the confd configuration between versions 1.10.2 and 1.12.0/1.12.1. Note that there are no configuration changes between versions 1.12.0 and 1.12.1, so the differences apply to both.

Added services.routing.translationFunctions.hostRequest

A new translation function has been added which will allow custom Lua code to modify requests to backend hosts before they are sent.

Added services.routing.translationFunctions.session

A new translation function has been added which will allow custom Lua code to be executed after the router has made the routing decision but before generating the redirect URL.

An example use case would be for enabling instream sessions, which can be done by

setting this value to return set_session_type('instream').

Removed services.routing.settins.managedSessions configuration

This configuration is no longger used.

Added services.routing.tuning.general.maxActiveManagedSessions tuning parameter.

This parameter configures the maximum number of active managed sessions.

1.1.2.7 - Installing release 1.10.x

To install ESB3024 Router, one first needs to copy the installation ISO image

to the target node where the router will be run. Due to the way the

installer operates, it is necessary that the host is reachable by

password-less SSH from itself for the user account that will perform the

installation, and that this user has sudo access.

Prerequisites:

Ensure that the current user has

sudoaccess.sudo -lIf the above command fails, you may need to add the user to the

/etc/sudoersfile.Ensure that the installer has password-less SSH access to

localhost.If using the

rootuser, thePermitRootLoginproperty of the/etc/ssh/sshd_configfile must be set to ‘yes’.The local host key must also be included in the

.ssh/authorized_keysfile of the user running the installer. That can be done by issuing the following as the intended user:mkdir -m 0700 -p ~/.ssh ssh-keyscan localhost >> ~/.ssh/authorized_keysNote! The

ssh-keyscanutility will result in the key fingerprint being output on the console. As a security best-practice its recommended to verify that this host-key matches the machine’s true SSH host key. As an alternative, to thisssh-keyscanapproach, establishing an SSH connection to localhost and accepting the host key will have the same result.Disable SELinux.

The Security-Enhanced Linux Project (SELinux) is designed to add an additional layer of security to the operating system by enforcing a set of rules on processes. Unfortunately out of the box the default configuration is not compatible with the way the installer operates. Before proceeding with the installation, it is recommended to disable SELinux. It can be re-enabled after the installation completes, if desired, but will require manual configuration. Refer to the Red Hat Customer Portal for details.

To check if SELinux is enabled:

getenforceThis will result in one of 3 states, “Enforcing”, “Permissive” or “Disabled”. If the state is “Enforcing” use the following to disable SELinux. Either “Permissive” or “Disabled” is required to continue.

setenforce 0This disables SELinux, but does not make the change persistent across reboots. To do that, edit the

/etc/selinux/configfile and set theSELINUXproperty todisabled.It is recommended to reboot the computer after changing SELinux modes, but the changes should take effect immediately.

Assuming the installation ISO image is in the current working directory,

the following steps need to be executed either by root user or with sudo.

Mount the installation ISO image under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-esb3024-1.10.1.iso /mnt/acdRun the installer script.

/mnt/acd/installer

Upgrade from ESB3024 Router release 1.8.0

The following steps can be used to upgrade the router from release 1.8.0 to 1.10.x. If upgrading from an earlier release it is recommended to perform the upgrade in multiple steps; for instance when upgrading from release 1.6.0 to 1.10.x, it is recommended to first upgrade to 1.8.0 and then to 1.10.x.

The upgrade procedure for the router is performed by taking a backup of the configuration, installing the new release of the router, and applying the saved configuration.

With the router running, save a backup of the configuration.

The exact procedure to accomplish this depends on the current method of configuration, e.g. if

confdis used, then the configuration should be extracted fromconfd, but if the REST API is used directly, then the configuration must be saved by fetching the current configuration snapshot using the REST API.Extracting the configuration using

confdis the recommend approach where available.confcli | tee config_backup.jsonTo extract the configuration from the REST API, the following may be used instead. Depending on the version of the router used, an API-Key may be required to fetch from the REST API.

curl --insecure https://localhost:5001/v2/configuration \ | tee config_backup.jsonIf the API Key is required, it can be found in the file

/opt/edgeware/acd/router/cache/rest-api-key.jsonand can be passed to the API by setting the value of theX-API-Keyheader.curl --insecure -H "X-API-Key: 1234abcd" \ https://localhost:5001/v2/configuration \ | tee config_backup.jsonMount the new installation ISO under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-esb3024-1.10.1.iso /mnt/acdStop the router and all associated services.

Before upgrading the router it needs to be stopped, which can be done by typing this:

systemctl stop 'acd-*'Run the installer script.

/mnt/acd/installerMigrate the configuration.

Note that this step only applies if the router is configured using

confd. If it is configured using the REST API, this step is not necessary.The confd configuration used in version 1.8.0 is not directly compatible with 1.10.x, and may need to be converted. If this is not done, the configuration will not be valid and it will not be possible to make configuration changes.

To determine if the configuration needs to be converted,

confclican be run like below. If it prints error messages, the configuration needs to be converted. If no error messages are printed, the configuration is valid and no further updates are necessary.confcli | head -n5 [2024-04-02 14:48:37,155] [ERROR] Missing configuration key /integration [2024-04-02 14:48:37,162] [ERROR] Missing configuration key /services/routing/settings/qoeTracking [2024-04-02 14:48:37,222] [ERROR] Missing configuration key /services/routing/hostGroups/convoy-rr/hosts/convoy-rr-1/healthChecks [2024-04-02 14:48:37,222] [ERROR] Missing configuration key /services/routing/hostGroups/convoy-rr/hosts/convoy-rr-2/healthChecks [2024-04-02 14:48:37,242] [ERROR] Missing configuration key /services/routing/hostGroups/e-dns/hosts/linton-dns-1/healthChecks { "integration": { "convoy": { "bridge": { "accounts": {If error messages are printed, the configuration needs to be converted. If the configuration was saved in the file

config_backup.json, the conversion can be done by typing this at the command line:sed -E -e '/"hosts":/,/]/ s/([[:space:]]+)("hostname":.*)/\1\2\n\1"healthChecks": [],/' -e '/"apiKey":/ d' config_backup.json | \ curl -s -X PUT -T - -H 'Content-Type: application/json' http://localhost:5000/config/__active/systemctl restart acd-confdThis adds empty

healthCheckssections to all hosts and removes theapiKeyconfiguration. After that,acd-confdis restarted. See Configuration changes between 1.8.0 and 1.10.x for more details about the configuration changes.Migrating configuration to esb3024-1.10.2

When upgrading to version 1.10.2, an extra step is required to migrate the consistent hashing configuration. This step is necessary both when upgrading from an earlier 1.10 release and when upgrading from older versions. It is only needed if consistent hashing was configured in the previous version.

To determine if consistent hashing was configured, execute the following command:

confcli | head -n2 [2024-05-31 09:43:55,932] [ERROR] Missing configuration key /services/routing/rules/constantine/hashAlgorithm { "integration": {If an error message about a missing configuration key appears, the configuration must be migrated. If no such error message appears, this step should be skipped.

To migrate the configuration, execute the following command at the command line:

curl -s http://localhost:5000/config/__active/ | \ sed -E 's/(.*)("type":.*"consistentHashing")(,?)/\1\2,\n\1"hashAlgorithm": "MD5"\3/' | \ curl -s -X PUT -T - -H 'Content-Type: application/json' http://localhost:5000/config/__active/This command will read the current configuration, add the

hashAlgorithmconfiguration key, and write back the updated configuration.Remove the Account Monitor container

Older versions of the router installed the Account Monitor tool. This was removed in release 1.8.0, but if it is still present and unused, it can be removed by typing:

podman rm account-monitorRemove the

confd-transformer.luafileAfter installing or upgrading to 1.10.x, ensure that the

confd-transformer.luascript located in/opt/edgeware/acd/router/lib/standard_luadirectory is removed.This file contains deprecated Lua language definitions which will override newer versions of those functions already present in the ACD Router’s Lua Standard Library. When upgrading beyond 1.10.2, the installer will automatically remove this file, however for this particular release, it requires manual intervention.

rm -f /opt/edgeware/acd/router/lib/standard_lua/confd-transformer.luaAfter removing this file, it will be necessary to restart the router to flush the definitions from the router’s memory:

systemctl restart acd-router

Troubleshooting

If there is a problem running the installer, additional debug information can

be output by adding -v or -vv or -vvv to the installer command, the

more “v” characters, the more detailed output.

1.1.2.7.1 - Configuration changes between 1.8.0 and 1.10.x

Confd configuration changes

Below are the major changes to the confd configuration between version 1.8.0 and 1.10.x listed.

Added integration.convoy section

An integration.convoy section has been added to the configuration. It is

currently used for configuring the Convoy Bridge

service.

Removed services.routing.apiKey configuration

The services.routing.apiKey configuration key has been removed. This was an

obsolete way of giving the configuration access to the router. The key has to be

removed from the configuration when upgrading, otherwise the configuration will

not be accepted.

Added services.routing.settings.qoeTracking

A services.routing.settings.qoeTracking section has been added to the

configuration.

Added healthChecks sections to the hosts

The hosts in the hostGroup entries have been extended with a healthChecks

key, which is a list of functions that determine if a host is in good health.

For example, a redirecting host might look like this after the configuration has been updated:

{

"services": {

"routing": {

"hostGroups": [

{

"name": "convoy-rr",

"type": "redirecting",

"httpPort": 80,

"httpsPort": 443,

"forwardHostHeader": true,

"hosts": [

{

"name": "convoy-rr-1",

"hostname": "convoy-rr-1",

"ipv6_address": "",

"healthChecks": [

"health_check('convoy-rr-1')"

]

}

]

}

],

Added hashAlgorithm to the consistentHashing rule

In esb3024-1.10.2 the consistentHashing routing rule has been extended with a

hashAlgorithm key, which can have the values MD5, SDBM and Murmur. The

default value is MD5.

1.1.2.8 - Installing release 1.8.0

To install ESB3024 Router, one first needs to copy the installation ISO image

to the target node where the router will be run. Due to the way the

installer operates, it is necessary that the host is reachable by

password-less SSH from itself for the user account that will perform the

installation, and that this user has sudo access.

Prerequisites:

Ensure that the current user has

sudoaccess.sudo -lIf the above command fails, you may need to add the user to the

/etc/sudoersfile.Ensure that the installer has password-less SSH access to

localhost.If using the

rootuser, thePermitRootLoginproperty of the/etc/ssh/sshd_configfile must be set to ‘yes’.The local host key must also be included in the

.ssh/authorized_keysfile of the user running the installer. That can be done by issuing the following as the intended user:mkdir -m 0700 -p ~/.ssh ssh-keyscan localhost >> ~/.ssh/authorized_keysNote! The

ssh-keyscanutility will result in the key fingerprint being output on the console. As a security best-practice its recommended to verify that this host-key matches the machine’s true SSH host key. As an alternative, to thisssh-keyscanapproach, establishing an SSH connection to localhost and accepting the host key will have the same result.Disable SELinux.

The Security-Enhanced Linux Project (SELinux) is designed to add an additional layer of security to the operating system by enforcing a set of rules on processes. Unfortunately out of the box the default configuration is not compatible with the way the installer operates. Before proceeding with the installation, it is recommended to disable SELinux. It can be re-enabled after the installation completes, if desired, but will require manual configuration. Refer to the Red Hat Customer Portal for details.

To check if SELinux is enabled:

getenforceThis will result in one of 3 states, “Enforcing”, “Permissive” or “Disabled”. If the state is “Enforcing” use the following to disable SELinux. Either “Permissive” or “Disabled” is required to continue.

setenforce 0It is recommended to reboot the computer after changing SELinux modes, but the changes should take effect immediately.

Assuming the installation ISO image is in the current working directory,

the following steps need to be executed either by root user or with sudo.

Mount the installation ISO image under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-esb3024-1.8.0.iso /mnt/acdRun the installer script.

/mnt/acd/installer

Upgrade

The upgrade procedure for the router is performed by taking a backup of the configuration, installing the new version of the router, and applying the saved configuration.

With the router running, save a backup of the configuration.

The exact procedure to accomplish this depends on the current method of configuration, e.g. if

confdis used, then the configuration should be extracted fromconfd, but if the REST API is used directly, then the configuration must be saved by fetching the current configuration snapshot using the REST API.Extracting the configuration using

confdis the recommend approach where available.confcli | tee config_backup.jsonTo extract the configuration from the REST API, the following may be used instead. Depending on the version of the router used, an API-Key may be required to fetch from the REST API.

curl --insecure https://localhost:5001/v2/configuration \ | tee config_backup.jsonIf the API Key is required, it can be found in the file

/opt/edgeware/acd/router/cache/rest-api-key.jsonand can be passed to the API by setting the value of theX-API-Keyheader.curl --insecure -H "X-API-Key: 1234abcd" \ https://localhost:5001/v2/configuration \ | tee config_backup.jsonMount the new installation ISO under

/mnt/acd.Note: The mount-point may be any accessible path, but

/mnt/acdwill be used throughout this document.mkdir -p /mnt/acd mount esb3024-acd-router-1.2.0.iso /mnt/acdStop the router and all associated services.

Before upgrading the router it needs to be stopped, which can be done by typing this:

systemctl stop 'acd-*'Run the installer script.

/mnt/acd/installerMigrate the configuration.

Note that this step only applies if the router is configured using

confd. If it is configured using the REST API, this step is not necessary.The confd configuration used in version 1.6.0 is not directly compatible with 1.8.0, and may need to have a few minor manual updates in order to be valid. If this is not done, the configuration will not be valid and it will not be possible to make configuration changes.

To determine if the configuration needs to be manually updated,

confclican be run like below. If it prints error messages, the configuration needs to be updated. If no error messages are printed, the configuration is valid and no further updates are necessary.confcli services.routing | head [2024-02-01 19:05:10,769] [ERROR] Missing configuration key /services/routing/hostGroups/convoy-rr/forwardHostHeader [2024-02-01 19:05:10,779] [ERROR] Missing configuration key /services/routing/hostGroups/e-dns/forwardHostHeader [2024-02-01 19:05:10,861] [ERROR] 'forwardHostHeader'If error messages are printed, a

forwardHostHeaderconfiguration needs to be added to thehostGroupsconfiguration. This can be done by running this at the command line:curl -s http://localhost:5000/config/__active/ | \ sed -E 's/([[:space:]]+)"type": "(host|redirecting|dns)"(,?)/\1"type": "\2",\n\1"forwardHostHeader": false\3/' | \ curl -s -X PUT -T - -H 'Content-Type: application/json' http://localhost:5000/config/__active/This reads the active configuration from the router, adds the “forwardHostHeader” configuration to all host groups, and then sends the updated configuration back to the router.

See Configuration changes between 1.6.0 and 1.8.0 for more details about the configuration changes.

Remove the Account Monitor container

Previous versions of the router installed the Account Monitor tool. This is no longer included, but since the previous version installed, there will be a stopped Account Monitor container. If it is not used, the container can be removed by typing:

podman rm account-monitor

Troubleshooting

If there is a problem running the installer, additional debug information can

be output by adding -v or -vv or -vvv to the installer command, the

more “v” characters, the more detailed output.

1.1.2.8.1 - Configuration changes between 1.6.0 and 1.8.0

Confd configuration changes

Below are some of the configuration changes between version 1.4.0 and 1.6.0 listed. The list only contains the changes that might affect already existing configuration, enirely new items are not listed. Normally nothing needs to be done about this since they will be upgraded automatically, but they are listed here for reference.

Added enabled to contentPopularity

An enabled key has been added to

services.routing.settings.contentPopularity. After the key has been added, the

configuration looks like this:

{

"services": {

"routing": {

"settings": {

"contentPopularity": {

"enabled": true,

"algorithm": "score_based",

"sessionGroupNames": []

},

...

Added selectionInputItemLimit to tuning

A selectionInputItemLimit key has been added to

services.routing.tuning.general. After the key has been added, the

configuration looks like this:

{

"services": {